COVID-19 Sounds App

Voice and speech as predictors of COVID-19

February 11, 2021 - Voice-operated AI assistants and smart speakers are becoming increasingly popular, while voice features have recently emerged as biomarkers in the biomedical literature. Building on our previous work on respiratory sounds, we now present new findings on the analysis of voice for detecting potential COVID-19 infection. The initial results are described in a paper accepted for publication at IEEE ICASSP 2021, the leading acoustics and signal processing conference.

In this study, we analysed a subset of 343 participants and investigated how voice can be used to distinguish symptomatic individuals who tested positive from individuals who tested negative but also developed symptoms akin to COVID-19.

Findings

We first compared the prevalence of 11 common symptoms between the two groups. Loss of smell or taste was reported more frequently among positive participants than negative ones, while there were no significant differences across the remaining reported symptoms. This helps confirm that, despite being entirely crowdsourced, our data aligns with the general findings on COVID-19 symptoms.

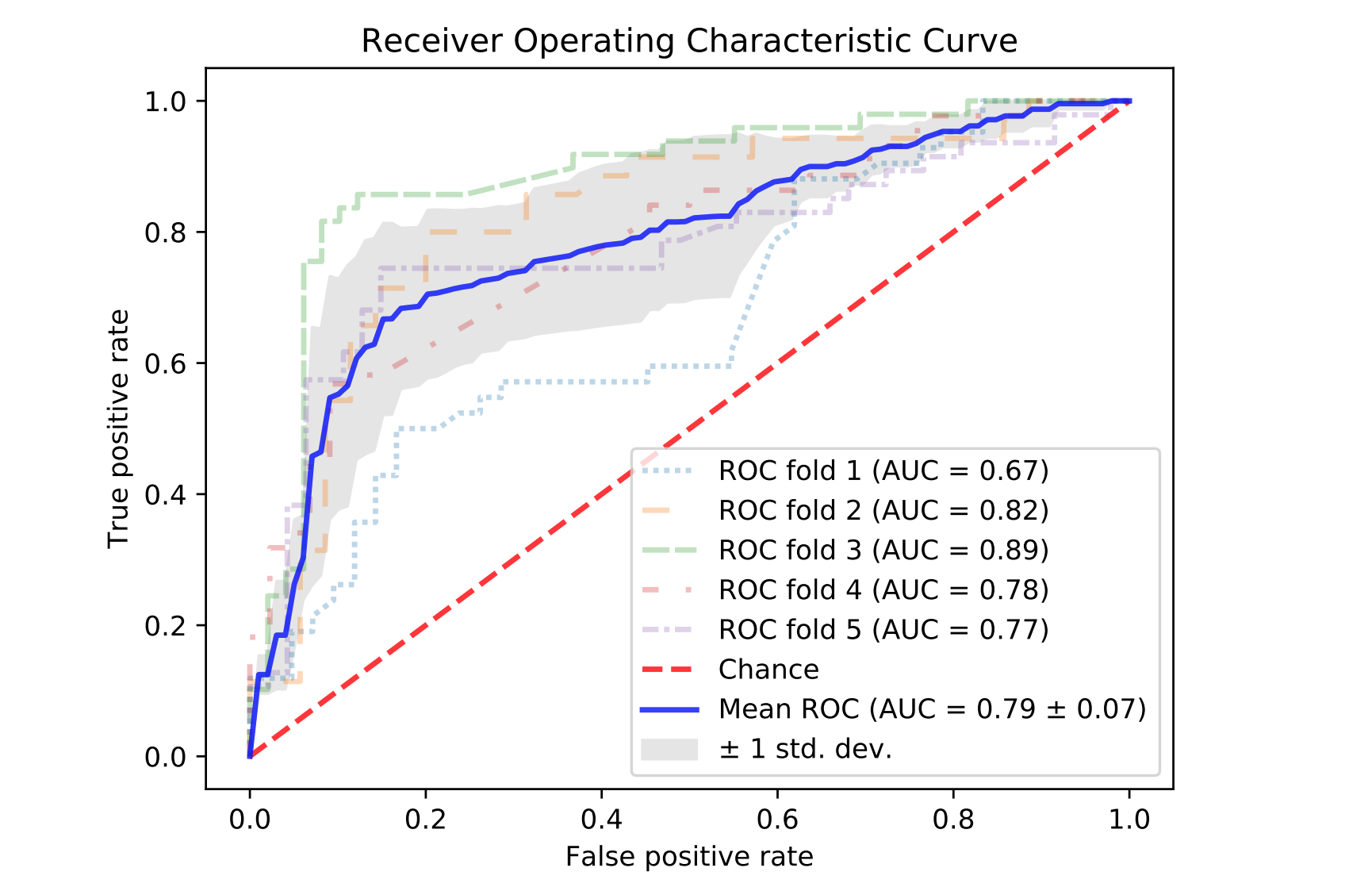

For each voice sample, we extracted 384 hand-crafted features common in acoustic and speech analysis and conducted experiments to detect COVID-19 using voice data only, as well as voice combined with symptoms. In particular, for the task of distinguishing symptomatic COVID-19 patients from non-COVID-19 controls who also developed similar symptoms, our voice-only model achieved an area under the ROC curve (AUC) of 77%. Moreover, when symptoms were taken into account, the AUC increased to 79% in a five-fold user-independent cross-validation (ROC curves are shown below).
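To illustrate what "user-independent" cross-validation and the AUC metric mean in practice, here is a minimal sketch in plain Python. It is not the code used in the paper: the function names `user_independent_folds` and `roc_auc` are hypothetical, the greedy fold balancing is an assumption made for illustration, and the example inputs in the usage note are toy values rather than study data.

```python
from collections import defaultdict


def user_independent_folds(user_ids, n_folds=5):
    """Split sample indices into folds so that no user appears in two folds.

    user_ids[i] is the (hypothetical) participant ID of sample i.
    """
    # Group sample indices by the user who recorded them.
    by_user = defaultdict(list)
    for idx, uid in enumerate(user_ids):
        by_user[uid].append(idx)

    # Greedy balancing (an assumption): place users with the most samples
    # first, always into the fold that currently holds the fewest samples.
    folds = [[] for _ in range(n_folds)]
    for uid in sorted(by_user, key=lambda u: (-len(by_user[u]), str(u))):
        smallest = min(range(n_folds), key=lambda f: len(folds[f]))
        folds[smallest].extend(by_user[uid])
    return folds


def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    Equals the probability that a randomly chosen positive sample receives
    a higher score than a randomly chosen negative one (ties count half).
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

In such a setup, each fold is held out once for testing while a model is trained on the remaining folds; because all recordings from one participant stay in the same fold, the model is never tested on a voice it has already heard. For instance, `roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1])` compares four positive-negative pairs, of which three are ranked correctly.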

The sensitivity and specificity also improved, showing the promise of combining voice and symptoms. However, when distinguishing asymptomatic patients from healthy controls, we observed a noticeable performance decrease: a high proportion of asymptomatic patients were misclassified as healthy participants. This implies that, with the current features and model, it is difficult to identify asymptomatic patients from their voice alone, which is in line with recent findings on different data [1, 2].
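For readers less familiar with these metrics, the following sketch shows how sensitivity and specificity are computed from model scores, and why misclassifying many patients as healthy shows up as low sensitivity. The function name `sensitivity_specificity` and the default threshold of 0.5 are assumptions chosen for illustration; they are not taken from the paper.

```python
def sensitivity_specificity(labels, scores, threshold=0.5):
    """Return (sensitivity, specificity) for binary labels and model scores.

    A sample is predicted positive when its score is >= threshold.
    labels use 1 for COVID-19 positive and 0 for negative.
    """
    tp = fn = tn = fp = 0
    for y, s in zip(labels, scores):
        predicted_positive = s >= threshold
        if y == 1:
            if predicted_positive:
                tp += 1  # patient correctly flagged
            else:
                fn += 1  # patient missed (classified as healthy)
        else:
            if predicted_positive:
                fp += 1  # healthy participant wrongly flagged
            else:
                tn += 1  # healthy participant correctly cleared
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative sample")
    sensitivity = tp / (tp + fn)  # share of true patients detected
    specificity = tn / (tn + fp)  # share of healthy participants cleared
    return sensitivity, specificity
```

Every patient counted in `fn` lowers the sensitivity while leaving the specificity unchanged, which is the pattern described above for the asymptomatic group.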

Code and data sharing

We are also developing multimodal deep learning models that take into account all audio modalities (cough, breathing, and speech), with the aim of showing their effect on personalized recommendations based on our longitudinal data. We continually update our models with the latest data as it comes in, and we are glad to announce that, since we posted our first numbers update last November, we now have a total of around 65,000 sound recordings from 32,000 participants. We also open-source our code for the analyses and the data collection apps on GitHub.

To give back to the community, we offered our data to the 13th ComParE challenge at the Interspeech 2021 conference, with two tasks (COVID-19 Cough, COVID-19 Speech). The challenge launched on Feb 4th, 2021 and aims to bring together researchers interested in investigating how human sounds (respiration and voice) could be explored to help the automatic diagnosis and screening of COVID-19.

Contribute to vital COVID-19 research

Finally, our data collection is still ongoing: we are very excited about the longitudinal data we are collecting over time, and we hope to be able to share more soon. COVID-19 is a new disease that we do not know much about, so every new data point is valuable for research. To contribute, you can download the Android and iOS applications.

How to cite our paper

Jing Han, Chloë Brown*, Jagmohan Chauhan*, Andreas Grammenos*, Apinan Hasthanasombat*, Dimitris Spathis*, Tong Xia*, Pietro Cicuta, and Cecilia Mascolo. "Exploring Automatic COVID-19 Diagnosis via Voice and Symptoms from Crowdsourced Data." arXiv preprint arXiv:2102.05225 (2021).

[PDF]. To appear in the proceedings of IEEE ICASSP 2021.

*equal contribution, alphabetical order

[1] M. Ismail, S. Deshmukh, R. Singh, “Detection of COVID-19 through the analysis of vocal fold oscillations,” in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), to appear, 2021.

[2] P. Bagad, A. Dalmia, J. Doshi, A. Nagrani, P. Bhamare, A. Mahale, S. Rane, N. Agarwal, R. Panicker, “Cough against COVID: Evidence of COVID-19 signature in cough sounds,” arXiv preprint arXiv:2009.08790, 2020.