COVID-19 Sounds App


Detecting potential COVID-19 with AI and respiratory sounds

July 20, 2020: We are developing pre-screening models that indicate whether someone is likely to have COVID-19, based solely on sounds that individuals record on a smartphone (e.g., coughing and breathing). The initial results are described in a paper accepted for publication at ACM KDD 2020 (Health Day), a leading data science and AI conference.

Our AI models use data from the COVID-19 Sounds study app, which we launched in April and which has attracted thousands of users from all over the world, to whom we are very grateful. The app collects information on symptoms and medical history, and every couple of days it prompts users to record their coughing and breathing, as well as their voice while reading a sentence displayed on the screen. The models are designed to predict COVID-19 infection without a traditional test (swab or blood). Instead, motivated by indications of audible respiratory changes in patients, such as a drier cough, we use sound data to train predictive models.

In the study published at ACM KDD, we first analyzed the overlap of symptoms between users who had tested positive and users who had not been tested. This showed that wet and dry cough, as well as loss of taste or smell, are the most common symptoms, in line with previous research [1]. We then carefully selected two groups of users: those who had tested positive for COVID-19 (either within the last 14 days or earlier), and a control group of users from countries with a low prevalence of the virus at the time of writing who reported no medical history and no symptoms.
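The symptom analysis and group selection described above amount to a frequency count and a pair of filters over user records. The sketch below assumes a hypothetical `User` record with `country`, `tested_positive`, `symptoms` and `medical_history` fields; the app's actual data schema and the real list of low-prevalence countries are not given here, so the country names are placeholders.

```python
from collections import Counter
from dataclasses import dataclass, field


# Hypothetical per-user record; the app's real schema is not described in this post.
@dataclass
class User:
    user_id: str
    country: str
    tested_positive: bool  # positive test at any time (last 14 days or earlier)
    symptoms: list = field(default_factory=list)
    medical_history: list = field(default_factory=list)


def most_common_symptoms(users, k=3):
    """Rank the symptoms reported by a group of users by frequency."""
    counts = Counter(symptom for u in users for symptom in u.symptoms)
    return counts.most_common(k)


def select_cohorts(users, low_prevalence_countries):
    """Return (positive group, control group) using the criteria described above."""
    positive = [u for u in users if u.tested_positive]
    control = [
        u for u in users
        if not u.tested_positive
        and u.country in low_prevalence_countries  # hypothetical country list
        and not u.symptoms
        and not u.medical_history
    ]
    return positive, control


users = [
    User("a", "country_A", True, ["dry cough", "loss of smell"]),
    User("b", "country_B", False),
    User("c", "country_B", False, ["wet cough"]),
    User("d", "country_A", False),
]
positive, control = select_cohorts(users, low_prevalence_countries={"country_B"})
print([u.user_id for u in positive], [u.user_id for u in control])  # ['a'] ['b']
print(most_common_symptoms(positive))
```

User "c" is excluded from the control group because they report a symptom, and user "d" because their (placeholder) country is not in the low-prevalence set.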

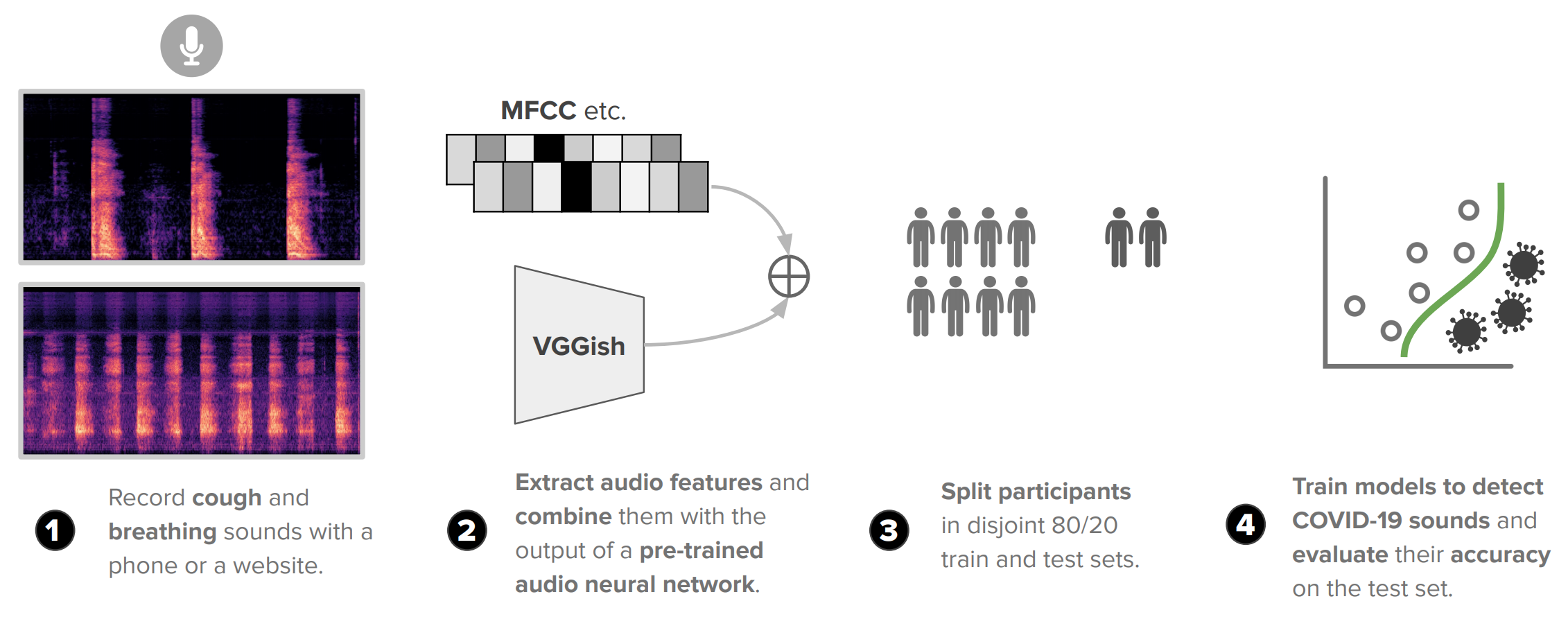

We then analyzed the coughing and breathing sounds using frequency-based features that are common in signal and sound processing (e.g., Mel-frequency cepstral coefficients, MFCCs), as well as embeddings extracted from a convolutional neural network trained by Google on millions of diverse sounds [2]. These embeddings capture general-purpose audio information and encode nuances or patterns that are hard to handcraft. To check that our models generalize to new people, we split the users 80/20 into training and test sets, so that no user's recordings appear in both, and trained widely used machine learning models such as Support Vector Machines (SVMs). An overview of our methods can be seen in the figure above.
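The key detail in this setup is that the 80/20 split is made over users rather than over individual recordings, so a model is never evaluated on someone it has already heard during training. The minimal sketch below shows that split together with one simple way of combining the two feature types: plain concatenation, which is an assumption, since the post does not say how they were fused. The names `fuse_features` and `split_by_user` and the toy data are hypothetical; real features would come from an MFCC extractor and the pre-trained network.

```python
import random


def fuse_features(handcrafted, embedding):
    """Concatenate handcrafted features (e.g. MFCC statistics) with a pre-trained embedding."""
    return list(handcrafted) + list(embedding)


def split_by_user(samples, test_fraction=0.2, seed=0):
    """Split (user_id, features, label) samples by user, so no user is in both sets."""
    users = sorted({user_id for user_id, _, _ in samples})
    rng = random.Random(seed)  # fixed seed for a reproducible split
    rng.shuffle(users)
    n_test = round(len(users) * test_fraction)
    test_users = set(users[:n_test])
    train = [s for s in samples if s[0] not in test_users]
    test = [s for s in samples if s[0] in test_users]
    return train, test


# Hypothetical data: 10 users with 4 recordings each, toy 1-D "MFCC" + 2-D "embedding".
samples = [
    (f"user{i % 10}", fuse_features([0.1 * i], [1.0, 2.0]), i % 2)
    for i in range(40)
]
train, test = split_by_user(samples)
print(len(train), len(test))  # 32 8
```

In a real pipeline the fused training vectors would be passed to a classifier such as scikit-learn's `SVC`, and scikit-learn's `GroupShuffleSplit` implements the same user-grouped split.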

Our results showed that combining breath and cough sounds can predict COVID-19 with an area under the ROC curve (AUC) of nearly 80%. Because a healthy cough may be hard to distinguish from a COVID-19 cough, we also ran experiments on smaller samples, comparing COVID-19-positive users who had a cough against (a) healthy users with a cough and (b) asthmatic users with a cough. Both comparisons also reached an AUC of around 80%. In all cases, combining the pre-trained model's embeddings with the handcrafted features gave the best results.
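AUC and accuracy are different metrics. AUC is the probability that a randomly chosen positive user receives a higher model score than a randomly chosen negative user, and it does not depend on any decision threshold. The minimal sketch below computes it directly from that definition (counting ties as half) and contrasts it with accuracy at one arbitrary threshold; the labels and scores are made-up toy values, not results from the study.

```python
def roc_auc(labels, scores):
    """Probability that a random positive outscores a random negative (ties count 1/2)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def accuracy(labels, scores, threshold):
    """Fraction of correct predictions when scores >= threshold are called positive."""
    preds = [1 if s >= threshold else 0 for s in scores]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


labels = [1, 1, 0, 0]          # 1 = COVID-19 positive (toy data)
scores = [0.9, 0.4, 0.5, 0.1]  # hypothetical model outputs
print(roc_auc(labels, scores))         # 0.75
print(accuracy(labels, scores, 0.45))  # 0.5
```

Three of the four positive/negative pairs are ranked correctly, giving an AUC of 0.75, while the chosen threshold gets only half of the predictions right. The pairwise loop is quadratic, which is fine for illustration; library functions such as scikit-learn's `roc_auc_score` compute the same quantity more efficiently.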

These results only scratch the surface of what this type of data can offer. While they are a positive signal, they are not yet robust enough to constitute a standalone screening tool. For now, we have also limited ourselves to a small subset of the data collected, because users reporting a positive COVID-19 test make up a much smaller proportion than the rest of the users.
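The post does not detail how the subset was chosen. One common way to cope with this kind of class imbalance is random undersampling: keep every minority-class (here, COVID-19-positive) user and draw an equally sized random sample from the majority class. The sketch below assumes one `(user_id, label)` entry per user, so balancing happens at the user level; the function name `undersample` is hypothetical.

```python
import random


def undersample(users, seed=0):
    """Balance a list of (user_id, label) pairs by randomly dropping majority-class users."""
    rng = random.Random(seed)
    pos = [u for u in users if u[1] == 1]
    neg = [u for u in users if u[1] == 0]
    minority, majority = (pos, neg) if len(pos) <= len(neg) else (neg, pos)
    balanced = minority + rng.sample(majority, len(minority))
    rng.shuffle(balanced)  # avoid all minority-class users appearing first
    return balanced


# Toy data: 3 positive users and 17 negative users.
users = [(f"user{i}", 1 if i < 3 else 0) for i in range(20)]
balanced = undersample(users)
print(len(balanced), sum(label for _, label in balanced))  # 6 3
```

The cost of this approach is that most majority-class data goes unused, which is why moving to the larger dataset would call for alternatives such as class weighting.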

Our team is now focused on using the larger dataset in our analysis, adding extra inputs such as the voice recordings, modelling how the sounds change over time as symptoms progress, and building better tooling for data quality assurance, given the crowdsourced nature of the experiment.
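Crowdsourced recordings can be empty, truncated, or distorted. As a purely illustrative sketch of the kind of automatic checks such tooling might include (not the team's actual pipeline), the function below flags recordings that are too short, nearly silent, or heavily clipped. It assumes mono audio already decoded to floats in [-1, 1]; the function name `quality_issues` and all thresholds are hypothetical.

```python
import math


def quality_issues(samples, sample_rate, min_seconds=1.0,
                   silence_rms=0.01, clip_level=0.99, max_clipped=0.01):
    """Return a list of problems found in one mono recording (floats in [-1, 1])."""
    if not samples or len(samples) / sample_rate < min_seconds:
        return ["too_short"]
    issues = []
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    if rms < silence_rms:  # almost no signal energy
        issues.append("near_silent")
    clipped = sum(1 for x in samples if abs(x) >= clip_level) / len(samples)
    if clipped > max_clipped:  # too many samples at full scale
        issues.append("clipped")
    return issues


sr = 16_000
tone = [0.5 * math.sin(2 * math.pi * 440 * n / sr) for n in range(sr)]
print(quality_issues(tone, sr))         # []
print(quality_issues([0.0] * sr, sr))   # ['near_silent']
print(quality_issues([1.0] * sr, sr))   # ['clipped']
print(quality_issues([0.1] * 100, sr))  # ['too_short']
```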

That's all? No! Data collection is still ongoing, and we recently launched an iOS app for Apple devices. As mentioned above, we are very excited about the longitudinal data we are collecting over time, and we hope to share results soon. COVID-19 is a new disease that we still know little about, so every new data point is valuable for research. To contribute, you can download the Android and iOS apps.

How to cite our paper

Chloë Brown*, Jagmohan Chauhan*, Andreas Grammenos*, Jing Han*, Apinan Hasthanasombat*, Dimitris Spathis*, Tong Xia*, Pietro Cicuta, and Cecilia Mascolo. "Exploring Automatic Diagnosis of COVID-19 from Crowdsourced Respiratory Sound Data." Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (KDD 2020).


*equal contribution, alphabetical order

[1] Menni, Cristina, Ana M. Valdes, Maxim B. Freidin, Carole H. Sudre, Long H. Nguyen, David A. Drew, Sajaysurya Ganesh et al. "Real-time tracking of self-reported symptoms to predict potential COVID-19." Nature Medicine (2020): 1-4.

[2] Hershey, Shawn, Sourish Chaudhuri, Daniel PW Ellis, Jort F. Gemmeke, Aren Jansen, R. Channing Moore, Manoj Plakal et al. "CNN architectures for large-scale audio classification." IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (2017).